AMD powers Zyphra’s large-scale AI training milestone

Zyphra trains its ZAYA1 foundation model entirely on AMD hardware, marking a major step for large-scale AI development.

AMD has announced that Zyphra has completed training ZAYA1, a new Mixture-of-Experts (MoE) foundation model built entirely on AMD’s GPU and networking platform. The work marks the first time a large-scale MoE model has been trained using AMD Instinct MI300X GPUs together with AMD Pensando networking and the ROCm open software stack.

Zyphra detailed the achievement in a technical report. According to the company, ZAYA1 delivers competitive or superior results across reasoning, mathematics and coding benchmarks when compared with leading open models. AMD presents the results as evidence that its platform can support production-scale AI workloads, which typically run on competing GPU systems.

Emad Barsoum, corporate vice president of AI and engineering in AMD’s Artificial Intelligence Group, said the milestone highlights how the company’s technology can support modern AI development. “AMD leadership in accelerated computing is empowering innovators like Zyphra to push the boundaries of what’s possible in AI,” he said. “This milestone showcases the power and flexibility of AMD Instinct GPUs and Pensando networking for training complex, large-scale models.”

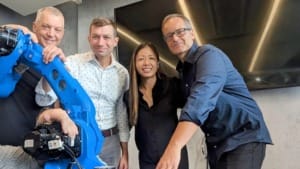

Zyphra’s chief executive Krithik Puthalath said the model reflects the company’s broader focus on efficiency. “Efficiency has always been a core guiding principle at Zyphra. It shapes how we design model architectures, develop algorithms for training and inference, and choose the hardware with the best price-performance to deliver frontier intelligence to our customers,” he said. He added that the organisation is “thrilled to be the first company to demonstrate large-scale training on an AMD platform” and intends to continue working with AMD and IBM as it develops future multimodal foundation models.

Focus on memory capacity and training throughput

Zyphra reported that the MI300X GPU’s 192 GB of high-bandwidth memory played a central role in the model’s training efficiency. The additional capacity allowed the team to avoid expert or tensor sharding, which can add complexity and slow training. Zyphra also reported model save times more than ten times faster thanks to AMD’s optimised distributed I/O, which helped improve reliability during large-scale runs.
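To give a rough sense of why 192 GB per GPU matters, the back-of-envelope sketch below estimates the memory needed to hold the training state of an 8.3-billion-parameter model. The byte counts per parameter are an assumption (a common mixed-precision setup with an Adam-style optimizer), not Zyphra's reported configuration, and the estimate leaves out activations, buffers and framework overhead.

```python
# Back-of-envelope estimate of training-state memory per model replica.
# ASSUMPTION: the per-parameter byte counts below describe a common
# mixed-precision + Adam-style setup. They are illustrative only and are
# not taken from Zyphra's technical report.

HBM_CAPACITY_GB = 192        # MI300X memory capacity, as stated in the article
TOTAL_PARAMS = 8.3e9         # ZAYA1-Base total parameters, as stated in the article

ASSUMED_BYTES_PER_PARAM = {
    "bf16 weights": 2,
    "bf16 gradients": 2,
    "fp32 master weights": 4,
    "fp32 optimizer first moment": 4,
    "fp32 optimizer second moment": 4,
}


def state_size_gb(n_params: float, bytes_per_param: float) -> float:
    """Return the size in GB (10**9 bytes) of n_params at bytes_per_param each."""
    return n_params * bytes_per_param / 1e9


def training_state_gb(n_params: float, breakdown: dict) -> float:
    """Sum the assumed per-parameter costs into one training-state estimate."""
    return state_size_gb(n_params, sum(breakdown.values()))


if __name__ == "__main__":
    weights_only = state_size_gb(TOTAL_PARAMS, ASSUMED_BYTES_PER_PARAM["bf16 weights"])
    full_state = training_state_gb(TOTAL_PARAMS, ASSUMED_BYTES_PER_PARAM)
    print(f"bf16 weights only:      {weights_only:.1f} GB")
    print(f"assumed training state: {full_state:.1f} GB")
    print(f"HBM left for the rest:  {HBM_CAPACITY_GB - full_state:.1f} GB")
```

Under these assumptions the weights alone come to about 16.6 GB and the full training state to roughly 133 GB, which is below 192 GB before activations are counted. This is only an illustration of the arithmetic, not a description of how ZAYA1 was actually trained.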

ZAYA1-Base contains 8.3 billion total parameters, of which 760 million are active for any given token. Despite the lower active parameter count, the model matches or exceeds the performance of several well-known systems, including Qwen3-4B from Alibaba, Gemma3-12B from Google, Meta’s Llama-3-8B and OLMoE.
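The parameter figures above can be put into proportion with a short calculation. The sketch below computes the share of ZAYA1-Base parameters that are active and compares the active count with the nominal sizes of three of the models named. The nominal sizes are an assumption read off the model names (4B, 8B, 12B), not exact parameter counts, and OLMoE is left out because its name does not state a size.

```python
# Proportion of ZAYA1-Base parameters that are active, and how the active
# count compares with the nominal sizes of the comparison models.
# ASSUMPTION: nominal sizes are read off the model names and are
# approximate; they are not exact published parameter counts.

TOTAL_PARAMS = 8.3e9     # ZAYA1-Base total parameters, from the article
ACTIVE_PARAMS = 760e6    # ZAYA1-Base active parameters, from the article

NOMINAL_SIZES = {
    "Qwen3-4B": 4e9,
    "Llama-3-8B": 8e9,
    "Gemma3-12B": 12e9,
}


def active_fraction(active: float, total: float) -> float:
    """Return the fraction of a model's parameters that are active."""
    return active / total


def active_vs_nominal(active: float, sizes: dict) -> dict:
    """Return active / nominal size for each comparison model."""
    return {name: active / size for name, size in sizes.items()}


if __name__ == "__main__":
    frac = active_fraction(ACTIVE_PARAMS, TOTAL_PARAMS)
    print(f"Active share of ZAYA1-Base: {frac:.1%}")
    for name, ratio in active_vs_nominal(ACTIVE_PARAMS, NOMINAL_SIZES).items():
        print(f"Active params as a share of {name}'s nominal size: {ratio:.1%}")
```

On these figures, roughly 9% of ZAYA1-Base's parameters are active, and that active count is well under a fifth of the nominal size of even the smallest model listed.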

Joint work with AMD and IBM on large-scale infrastructure

The development builds on earlier collaboration between Zyphra, AMD and IBM. Together, the companies designed and deployed a large-scale training cluster that combines AMD Instinct GPUs with IBM Cloud’s high-performance fabric and storage architecture. The system, first announced earlier in the quarter, provided the infrastructure required to train ZAYA1 at scale.

The companies said the engineering partnership enabled Zyphra to run complex pretraining workloads more efficiently, supported by AMD’s hardware platform and IBM’s cloud-native performance architecture.

The ZAYA1 report, together with accompanying updates from both companies, outlines the training approach, model design and AMD technologies used during development. AMD said the milestone reflects growing momentum around its GPU platform as an alternative to well-established competitors in large-scale AI training.