Nvidia’s AI progress outpaces historical computing standards

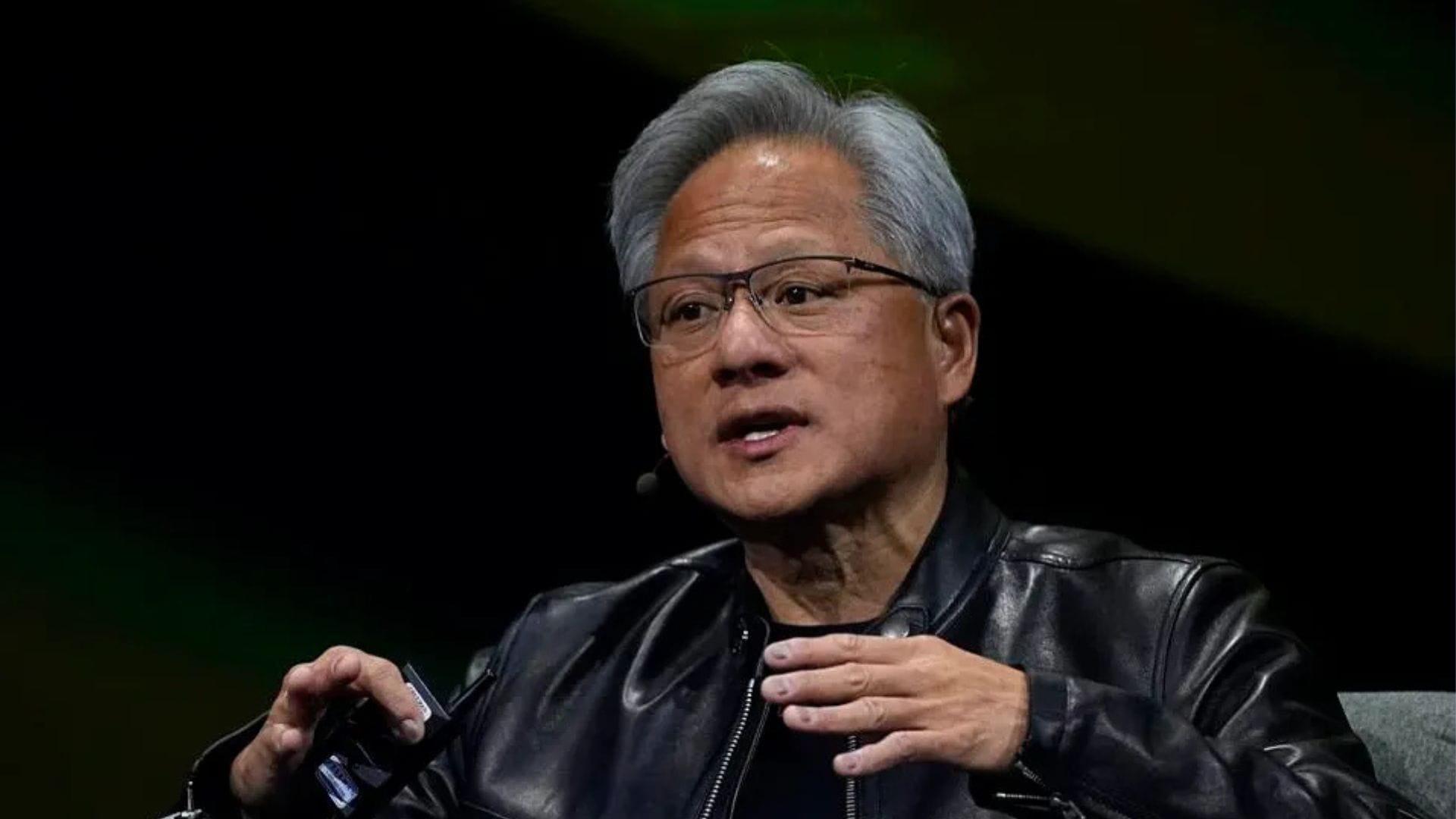

Nvidia CEO Jensen Huang reveals that the company’s AI chips are advancing faster than Moore’s Law, promising cheaper, more powerful AI in the future.

Nvidia CEO Jensen Huang has boldly declared that the performance of the company’s AI chips is advancing at a rate exceeding Moore’s Law. This statement came during an interview on Tuesday, following his keynote speech to a 10,000-strong audience at CES in Las Vegas.

“Moore’s Law is no longer the standard for progress,” Huang said. “Our systems are advancing at a pace far beyond it.”

Introduced by Intel co-founder Gordon Moore in 1965, Moore’s Law predicted that the number of transistors on a chip would roughly double every year, a rate Moore revised to about every two years in 1975, with chip performance improving at a similar pace. For decades, this forecast drove major gains in computing power and affordability. In recent years, however, Moore’s Law has slowed, even as Nvidia’s own performance gains have accelerated.

According to Huang, Nvidia’s latest data centre superchip is more than 30 times faster than the previous generation at running AI inference workloads. He attributes this to the company’s approach of innovating across the entire stack, from chip design to system architecture and algorithms.

A new era of AI scaling laws

Huang dismissed concerns that AI progress is stalling, arguing that gains in chip performance will drive down costs, just as Moore’s Law once did for traditional computing. He pointed to three AI scaling laws now shaping the field:

- Pre-training: The initial phase where AI models learn patterns from vast datasets.

- Post-training: Fine-tuning AI models through human feedback and other methods.

- Test-time computing: Giving AI models more computational resources during inference so they can deliver better results.

“While expensive today, test-time computing will become more affordable as we enhance performance,” Huang explained. He cited Nvidia’s latest data centre system, the GB200 NVL72, as a breakthrough: it is 30 to 40 times faster than Nvidia’s previous-generation H100 at running AI inference workloads. This leap, he claimed, could make AI models such as OpenAI’s o3, which currently costs nearly US$20 per task, more accessible in the future.

Nvidia’s long-term vision for AI

Nvidia’s dominance of the AI hardware market supports Huang’s optimistic outlook, but challenges remain. Tech companies have relied heavily on Nvidia chips such as the H100 to train AI models. Now, as the focus shifts towards inference, some question whether Nvidia’s expensive chips can keep their edge.

Huang, however, remains focused on long-term solutions. “The key to making test-time compute both performant and affordable is to keep increasing our computational capabilities,” he said. He also highlighted that AI reasoning models could generate better data for pre-training and post-training, further reducing costs.

The cost of using AI models has already fallen sharply over the past year, driven in part by hardware advances such as Nvidia’s. Huang expressed confidence that this trend will continue as chip performance keeps improving.

“Our AI chips are 1,000 times better today than what we made 10 years ago,” Huang said. “We’re not just keeping up with Moore’s Law—we’re surpassing it.”
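To put the 1,000-fold figure in context, the short Python sketch below compares it with compound doubling. It is an illustration under stated assumptions, not Nvidia’s methodology: it assumes “better” can be reduced to a single performance multiplier and that the period is exactly 10 years. The helper names `growth_over` and `implied_annual_factor` are hypothetical.

```python
def growth_over(years: float, doubling_period_years: float) -> float:
    """Total improvement factor if performance doubles every `doubling_period_years`."""
    return 2 ** (years / doubling_period_years)


def implied_annual_factor(total_factor: float, years: float) -> float:
    """Average year-over-year multiplier needed to reach `total_factor` in `years`."""
    return total_factor ** (1 / years)


# Assumption: the claimed 1,000x gain spans exactly 10 years.
years = 10
claimed = 1000

moore_yearly = growth_over(years, 1)    # Moore's 1965 form: doubling every year
moore_two_year = growth_over(years, 2)  # Moore's 1975 revision: doubling every two years
annual = implied_annual_factor(claimed, years)

print(f"Doubling every year for {years} years:      {moore_yearly:,.0f}x")
print(f"Doubling every two years for {years} years: {moore_two_year:,.0f}x")
print(f"Average annual gain implied by {claimed:,}x:  {annual:.2f}x per year")
```

Under these assumptions, a 1,000x gain over ten years works out to roughly a doubling every year. That is far ahead of the two-year cadence most often quoted for Moore’s Law (about 32x per decade) and comparable to the annual doubling in Moore’s original 1965 prediction (about 1,024x).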