Disinformation security: Safeguarding truth in the digital age

Discover how AI detection tools, public education, and smart regulations are working together to combat the spread of misinformation online.

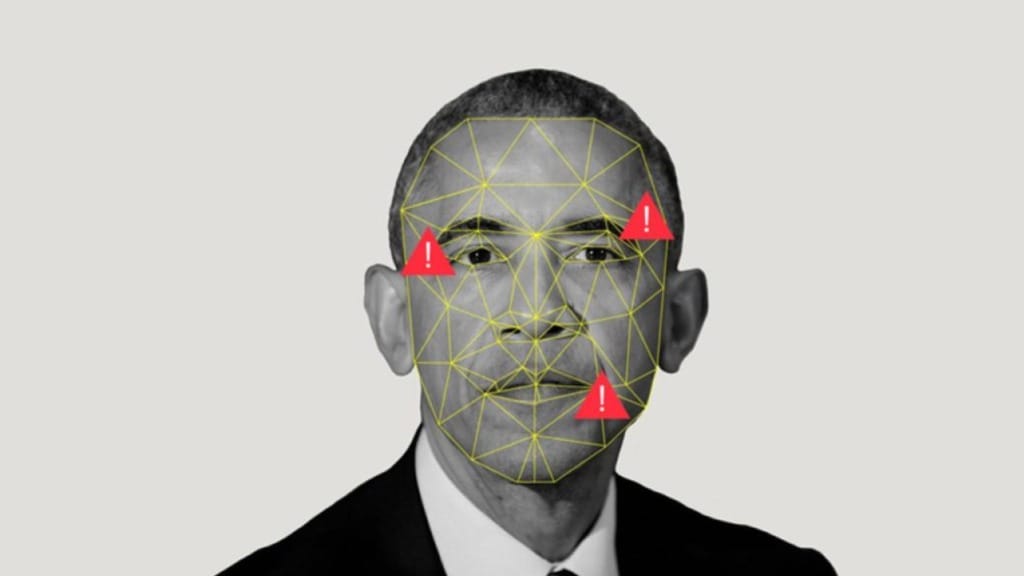

The digital world has become a breeding ground for misinformation, with false narratives spreading faster and more convincingly than ever before. The growing sophistication of artificial intelligence (AI) has amplified this issue, enabling the creation of hyper-realistic fake content, from deepfakes to AI-generated news articles. The line between truth and deception is becoming increasingly blurred, posing a serious threat to public trust, social cohesion, and even national security.

At the same time, AI offers powerful tools to counteract misinformation. Governments, technology companies, and individuals must collaborate to address this issue through AI-powered detection systems, transparent practices, public education, and effective regulations. This article explores the challenges posed by AI-driven misinformation and outlines key strategies to protect the integrity of information in a rapidly evolving digital landscape.

Understanding the impact of AI on misinformation

Artificial intelligence has transformed how information is created, distributed, and consumed. While it brings efficiency and innovation, it also enables the mass production of fake content with alarming precision. Deepfake technology, for example, can produce realistic videos of people saying or doing things they never did, leading to scandals, misinformation campaigns, and public confusion.

Beyond visuals, AI can generate convincing fake news articles designed to manipulate emotions and drive engagement. These pieces often mimic legitimate journalism, making them harder to detect at a glance. Social media algorithms compound the problem by promoting sensational content that keeps users engaged, even when it is misleading or false.

AI-powered bots play another critical role in spreading misinformation. These automated systems can flood social media platforms with fake accounts, amplifying false narratives and making them appear more widespread than they actually are. This tactic overwhelms fact-checkers and creates an illusion of consensus around false claims.
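One signal of this kind of amplification is many distinct accounts posting near-identical text. The sketch below illustrates that idea only; the function name, the `(account, text)` input shape, and the `min_accounts` threshold are assumptions made for the example, not a description of how any platform actually detects bots.

```python
from collections import defaultdict


def find_coordinated_posts(
    posts: list[tuple[str, str]], min_accounts: int = 3
) -> dict[str, list[str]]:
    """Group posts by normalised text and return the texts that were
    posted by at least `min_accounts` distinct accounts.

    `posts` is a list of (account_id, text) pairs. The threshold is a
    hypothetical value chosen for illustration.
    """
    accounts_by_text: dict[str, set[str]] = defaultdict(set)
    for account, text in posts:
        # Lowercase and collapse whitespace so trivial edits still match.
        normalized = " ".join(text.lower().split())
        accounts_by_text[normalized].add(account)

    return {
        text: sorted(accounts)
        for text, accounts in accounts_by_text.items()
        if len(accounts) >= min_accounts
    }
```

A match here is a reason to look closer, not proof of automation: genuine users also repeat slogans and quotes, so results like these would normally feed a review step rather than trigger removal.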

The consequences of unchecked misinformation extend beyond the digital sphere. False health advice can mislead individuals into harmful actions, manipulated narratives can influence election outcomes, and financial misinformation can destabilise markets. The erosion of trust in credible institutions is one of the most damaging outcomes of this growing problem.

To effectively combat these challenges, it’s essential to understand both the capabilities of AI tools and the vulnerabilities they exploit. Only then can meaningful solutions be implemented to limit their misuse.

AI-powered tools to detect and prevent misinformation

While AI contributes to spreading misinformation, it also offers some of the most effective tools to fight back. Advanced AI detection systems are already used across social media platforms and fact-checking organisations. These systems can scan vast amounts of text, images, and videos, identifying patterns or inconsistencies that indicate false or manipulated content.

Natural language processing (NLP) tools are particularly effective for analysing written content. These systems compare claims against verified sources, flagging potential inaccuracies for human reviewers. Platforms like Facebook and YouTube leverage similar technology to moderate content at scale, aiming to catch harmful material before it gains traction.
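The idea of comparing a claim against verified statements and flagging weak matches can be sketched in a few lines. This is a deliberately simple stand-in that uses word overlap (Jaccard similarity) instead of a trained language model; the function names and the `threshold` value are assumptions for illustration. Word overlap cannot tell agreement from contradiction, which is one reason flagged items go to human reviewers.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its set of word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard(a: set[str], b: set[str]) -> float:
    """Share of tokens common to both sets (0.0 when either is empty)."""
    if not a or not b:
        return 0.0
    return len(a & b) / len(a | b)


def best_match(claim: str, verified: list[str]) -> tuple[str, float]:
    """Return the verified statement closest to the claim, with its score."""
    claim_tokens = tokenize(claim)
    scored = [(s, jaccard(claim_tokens, tokenize(s))) for s in verified]
    return max(scored, key=lambda pair: pair[1])


def needs_review(claim: str, verified: list[str], threshold: float = 0.5) -> bool:
    """Flag the claim for human review when nothing verified matches closely.

    The threshold is a hypothetical value, not a recommended setting.
    """
    if not verified:
        return True
    _, score = best_match(claim, verified)
    return score < threshold
```

For example, calling `needs_review("Drinking bleach cures infections", verified)` against a list of verified health statements would flag the claim if none of them shares enough wording with it.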

Digital watermarks are another promising development. By embedding invisible markers into AI-generated content, creators can ensure transparency and traceability. These watermarks make it easier to identify synthetic media, holding creators accountable for any misuse.
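To make the idea of an invisible marker concrete, here is a toy sketch that hides a bit pattern in the least significant bit of each pixel value. It is an assumption-laden illustration, not the scheme any real generator uses: the function names are hypothetical, pixels are modelled as a flat list of 0-255 integers, and a marker this simple would not survive compression or resizing.

```python
def embed_watermark(pixels: list[int], bits: list[int]) -> list[int]:
    """Return a copy of `pixels` with `bits` written into the lowest bit
    of the first len(bits) values. Each pixel changes by at most 1."""
    if len(bits) > len(pixels):
        raise ValueError("watermark is longer than the image data")
    if any(bit not in (0, 1) for bit in bits):
        raise ValueError("watermark must contain only 0s and 1s")

    marked = list(pixels)
    for i, bit in enumerate(bits):
        # Clear the lowest bit, then set it to the watermark bit.
        marked[i] = (marked[i] & ~1) | bit
    return marked


def extract_watermark(pixels: list[int], length: int) -> list[int]:
    """Read back the lowest bit of the first `length` pixel values."""
    return [p & 1 for p in pixels[:length]]
```

Because each value shifts by at most one intensity level, the change is not visible to a viewer, yet a tool that knows where to look can read the pattern back.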

However, AI detection tools face significant challenges. As technology evolves, those spreading misinformation find new ways to evade detection systems. It’s an ongoing arms race that requires constant updates and innovation in detection methods. The most effective strategy is a hybrid approach combining AI technology and human oversight. While AI excels at quickly processing vast amounts of data, human analysts provide the context and critical thinking needed to make final judgment calls.
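A hybrid workflow of this kind can be sketched as a triage step: a model assigns each item a score, and the score decides how quickly a human sees it. The `Item` class, queue names, and threshold values below are hypothetical choices for illustration; in this sketch only low-scoring items skip review, and everything else waits for a human decision.

```python
from dataclasses import dataclass


@dataclass
class Item:
    item_id: str
    score: float  # model's estimated probability that the content is false


def triage(
    items: list[Item], high: float = 0.9, low: float = 0.1
) -> dict[str, list[str]]:
    """Sort items into queues by model score.

    - score >= high: reviewed by a human first
    - score <= low:  cleared without review
    - otherwise:     reviewed by a human in normal order
    The thresholds are assumed values, not recommended settings.
    """
    queues: dict[str, list[str]] = {
        "priority_review": [],
        "standard_review": [],
        "auto_clear": [],
    }
    for item in items:
        if item.score >= high:
            queues["priority_review"].append(item.item_id)
        elif item.score <= low:
            queues["auto_clear"].append(item.item_id)
        else:
            queues["standard_review"].append(item.item_id)
    return queues
```

The design choice is that the model never issues a final verdict on suspicious content; it only orders the work so that analysts spend their time where the risk appears highest.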

The role of public education in fighting misinformation

Technology alone cannot solve the misinformation crisis. Public awareness and education are equally important in building resilience against false narratives. Many people share fake content unintentionally because they lack the skills to identify misleading information. Addressing this gap is essential to reduce the problem at its roots.

Digital literacy must become a core focus in schools, workplaces, and communities. Teaching individuals how to critically evaluate online content, verify sources, and identify emotionally manipulative language can significantly reduce the spread of false information. Simple habits—like checking multiple sources before sharing content—can make a big difference.

Social media platforms also have a responsibility to educate their users. Features such as fact-checking labels, warning notifications on suspicious content, and clear reporting tools encourage individuals to think twice before sharing unverified information.

Collaboration with trusted figures—educators, journalists, and community leaders—can enhance these efforts. When credible voices address misinformation, the public is more likely to take it seriously.

Creating a culture of digital responsibility takes time, but it’s one of the most sustainable ways to combat misinformation. People must have the tools and knowledge to navigate an increasingly complex information landscape.

Regulation and ethical AI development

Effective regulation is critical in the fight against misinformation. Governments worldwide are beginning to implement policies requiring transparency around AI-generated content. Measures such as mandatory watermarks on AI-generated images and videos help users identify synthetic media more easily.

Striking the right balance is key. Overly strict regulations could stifle innovation and limit free expression, while overly lenient rules risk allowing misinformation to thrive unchecked. Policymakers must collaborate closely with technology experts to design practical and effective regulations.

Tech companies also play a central role. They must establish clear internal guidelines for developing and deploying AI tools. Ethical AI frameworks should prioritise transparency, fairness, and accountability, ensuring these tools are not misused to create harmful content.

Global cooperation is equally important. Misinformation campaigns often transcend borders, making it essential for countries to collaborate on international regulations and share best practices. Initiatives from organisations like the United Nations aim to establish global standards for AI governance.

Independent oversight bodies provide an added layer of accountability. By auditing AI systems and misinformation detection tools, these bodies can ensure compliance with ethical standards and identify areas for improvement.

Looking ahead

The battle against misinformation is far from over. As technology advances, so will the tactics used to spread false information. However, meaningful progress can be made with AI-powered detection tools, public education, transparent practices, and smart regulations.

Everyone has a role—governments, tech companies, educators, and individuals alike. By staying vigilant and proactive, we can create a digital environment where truth prevails over manipulation, and trust is restored in our information systems.