Transparent AI as a foundation for trust and ethics

Explore the crucial role of AI transparency in building trust and ethics in technology, highlighting its importance, challenges, and future trends.

Artificial intelligence (AI) systems are now deeply embedded in our daily routines, which makes a clear understanding of how they operate a necessity. AI transparency refers to the accessibility and clarity of AI systems’ processes, decisions, and utilisation. This transparency is akin to providing a window into AI’s otherwise opaque operations, allowing users and stakeholders to understand the rationale behind AI-generated outcomes. As AI technology becomes increasingly sophisticated, the call for transparency intensifies, so that AI operations remain understandable and worthy of trust.

For businesses, the emphasis on AI transparency is not just about technical compliance; it’s about building trust with customers and users. In customer interactions, where AI systems can make or break the customer experience, transparency becomes critical. For instance, when a customer interacts with a chatbot, understanding the basis of its responses or recommendations can significantly affect their trust and satisfaction levels. This level of openness is not merely a technical requirement but a strategic asset that can drive customer loyalty and engagement.

Moreover, transparency in AI is increasingly seen as a cornerstone of ethical AI practices. As AI systems are deployed across sectors from healthcare to finance, their decisions can have significant ethical and social impacts. Transparency exposes these systems to scrutiny, so that their operations and outputs can be justified and checked for fairness. It also helps demystify AI technologies, making them less intimidating and more accessible to the general public, thereby fostering broader acceptance and integration of these technologies into everyday life.

The importance of transparency in AI

The importance of AI transparency is rooted in the need for clarity, fairness, and accountability in AI operations. Clear, transparent AI practices enable users to understand and trust the technology, and help ensure that AI systems are used responsibly and ethically. The Zendesk Customer Experience Trends Report 2024 highlights that 65% of CX leaders now view AI as a strategic necessity, not just a technological advantage. As AI takes on this strategic role, transparency becomes central to maintaining operational integrity and strategic relevance in today’s technology-driven markets.

Transparency is particularly vital in preventing and addressing biases within AI systems. In scenarios where AI is used for hiring decisions or credit scoring, a lack of transparency can allow unfair biases against certain groups to go undetected, inadvertently reinforcing societal inequalities. Transparent practices help identify and correct these biases, making AI systems fairer and more equitable. Furthermore, transparency in AI decision-making processes reassures users that AI systems operate within ethical bounds, adhering to societal norms and regulatory requirements.
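One way to make such a bias check concrete is to compare how often each group receives a favourable outcome. The sketch below is illustrative only: the function names, the group labels, and the sample data are assumptions, not part of any particular product or regulation. It computes a selection rate per group and the ratio of the lowest rate to the highest, a figure sometimes compared against a four-fifths (0.8) rule of thumb in hiring contexts.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Return the share of favourable outcomes per group.

    `decisions` is an iterable of (group, selected) pairs, where
    `selected` is True when the AI system produced a favourable outcome.
    """
    totals = defaultdict(int)
    favourable = defaultdict(int)
    for group, selected in decisions:
        totals[group] += 1
        favourable[group] += int(selected)
    return {group: favourable[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Ratio of the lowest group selection rate to the highest."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received a favourable outcome
    return min(rates.values()) / highest


# Hypothetical screening outcomes for two applicant groups.
sample = (
    [("group_a", True)] * 6 + [("group_a", False)] * 4
    + [("group_b", True)] * 3 + [("group_b", False)] * 7
)
rates = selection_rates(sample)
ratio = disparate_impact_ratio(rates)
print(rates, ratio)
```

A low ratio does not prove that a system is unfair, and a high one does not prove that it is fair; it is simply a signal that the decisions deserve closer review.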

Lastly, transparency in AI fosters a sense of security and trust among users. When businesses openly share how their AI models operate and make decisions, they alleviate concerns about potential data misuse or biased decision-making. This open communication is essential at a time when data privacy concerns are at an all-time high. It also positions companies as responsible stewards of technology, enhancing their brand reputation and building stronger customer relationships.

Navigating the complexities of AI transparency

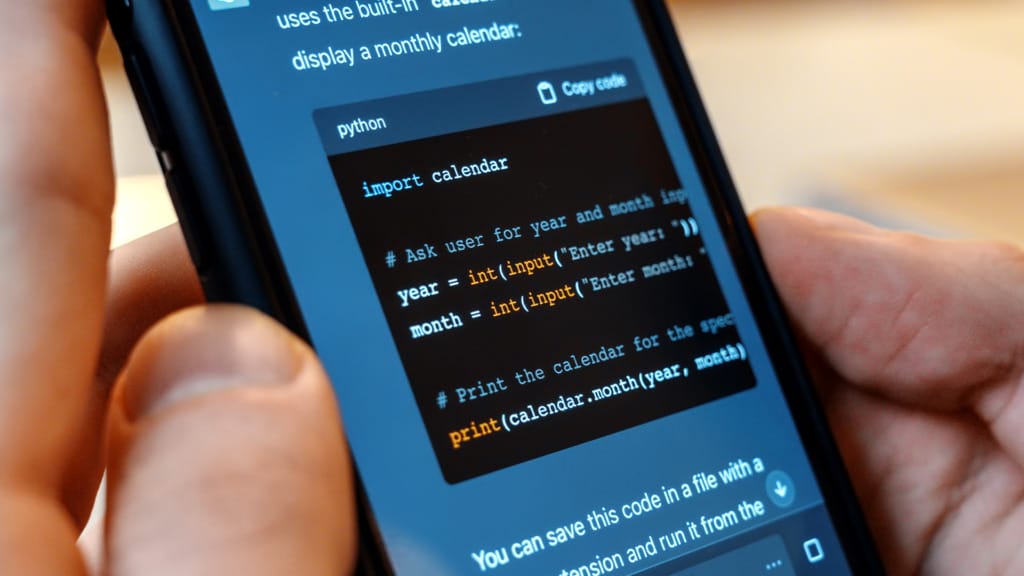

Achieving AI transparency is a complex endeavour involving multiple dimensions, from the technical aspects of AI systems to their societal impacts. The first step is ensuring explainability, which involves making the AI’s decision-making processes transparent and understandable. For example, if an AI system declines a loan application, the applicant should be able to understand the specific factors that influenced this decision. This level of clarity builds trust and allows users to interact with AI systems more effectively.
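To illustrate the loan example, the sketch below uses a deliberately simple linear scoring model, where each factor’s contribution to the decision can be read off directly. The weights, feature names, and threshold are hypothetical and chosen only for illustration; real credit models are usually more complex and may need dedicated explanation techniques.

```python
# Hypothetical weights for an illustrative linear score; not from any real lender.
WEIGHTS = {
    "income_thousands": 0.04,
    "debt_ratio": -3.0,
    "missed_payments": -0.8,
}
BASELINE = 0.5   # assumed starting score before any factor is applied
THRESHOLD = 0.0  # scores at or above this value are approved


def explain_decision(applicant: dict[str, float]) -> dict:
    """Score an applicant and report how much each factor contributed."""
    contributions = {
        name: weight * applicant[name] for name, weight in WEIGHTS.items()
    }
    score = BASELINE + sum(contributions.values())
    # Most negative contributions first, so the factors that hurt the
    # application most appear at the top of the explanation.
    ranked = sorted(contributions.items(), key=lambda item: item[1])
    return {"approved": score >= THRESHOLD, "score": score, "factors": ranked}


result = explain_decision(
    {"income_thousands": 40, "debt_ratio": 0.6, "missed_payments": 2}
)
print("approved:", result["approved"])
for name, contribution in result["factors"]:
    print(f"{name}: {contribution:+.2f}")
```

With an explanation of this kind, a declined applicant can see which factors weighed most heavily against them rather than receiving a bare refusal.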

Interpretability extends beyond explainability, focusing on users’ ability to understand the internal workings of AI systems. This includes the relationships between inputs and outputs and the logic that drives AI decisions. High levels of interpretability are particularly crucial in sensitive applications such as medical diagnostics or criminal justice, where the consequences of AI decisions can be significant. Ensuring these systems are interpretable helps maintain public trust and compliance with ethical standards.

Accountability in AI involves establishing mechanisms to hold AI systems and their operators responsible for the outcomes they produce. This includes setting up regulatory and operational frameworks that can track the performance of AI systems and address any issues that arise. Accountability ensures that AI systems do not operate in a vacuum and that there are consequences for errors or misjudgments, which are essential for maintaining public trust and legal compliance. It also involves continuously monitoring and updating AI systems to adapt to new data and changing conditions, ensuring that AI operations remain transparent over time.
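A basic building block for this kind of accountability is a record of each decision: which model version produced it, from which inputs, and who operates the system. The sketch below is a minimal in-memory illustration; the class names and fields are assumptions, and a production system would need durable, tamper-resistant storage and careful handling of personal data.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class DecisionRecord:
    """One AI decision, captured with enough context to review it later."""
    model_version: str
    inputs: dict
    output: str
    operator: str
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


class AuditLog:
    """Append-only, in-memory log of decisions (illustrative only)."""

    def __init__(self):
        self._records = []

    def record(self, entry: DecisionRecord) -> None:
        self._records.append(entry)

    def for_model(self, model_version: str) -> list:
        """Return every decision made by a given model version."""
        return [r for r in self._records if r.model_version == model_version]

    def to_jsonl(self) -> str:
        """Serialise the log as one JSON object per line for external review."""
        return "\n".join(
            json.dumps(asdict(r), sort_keys=True) for r in self._records
        )


log = AuditLog()
log.record(DecisionRecord("v1", {"debt_ratio": 0.6}, "declined", "lending-team"))
log.record(DecisionRecord("v2", {"debt_ratio": 0.2}, "approved", "lending-team"))
print(log.to_jsonl())
```

Keeping the model version alongside each decision means that, when a problem is found, the affected decisions can be traced and revisited.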

Regulatory and standard-setting frameworks

The regulatory landscape for AI transparency is rapidly evolving to keep pace with technological advancements. Frameworks such as the General Data Protection Regulation (GDPR) in Europe and potential new regulations in other regions aim to set transparency and data privacy benchmarks. Under the GDPR, for example, individuals subject to solely automated decisions that have legal or similarly significant effects are entitled to meaningful information about the logic involved. Such regulations also require that personal data used in AI processes is collected and processed lawfully and transparently, protecting user privacy and data security.

Standards and principles set by international bodies like the OECD offer guidelines for responsible AI deployment, emphasising accountability and fairness. These standards foster trust in AI systems by encouraging them to adhere to ethical norms and contribute positively to society. They provide a foundation on which businesses and governments can develop their own policies and practices, tailored to specific cultural and legal contexts.

Implementing these regulatory and standard-setting frameworks involves a collaborative effort among stakeholders, including technology developers, policymakers, and the public. It requires balancing innovation and regulation, ensuring that AI technologies advance while safeguarding ethical and societal values. This balancing act is crucial for fostering an environment where AI can thrive without compromising ethical standards or public trust.

Benefits and future outlook of transparent AI

The benefits of transparent AI range from enhanced user trust to improved regulatory compliance and more effective AI implementations. Transparency in AI fosters trust among users and empowers them to use AI technologies more effectively. When users understand how AI systems work, they are more likely to integrate them into their daily lives and workflows, potentially leading to innovative uses and enhancements.

Transparent AI also plays a critical role in enabling continuous improvement and innovation. By making AI processes visible and understandable, developers and researchers can more effectively identify inefficiencies and biases, driving advancements in AI technology. This iterative process helps ensure that AI systems remain relevant and effective over time, adapting to new challenges and opportunities.

The emphasis on AI transparency is expected to grow, driven by both technological advancements and evolving societal expectations. Tools and methodologies for enhancing AI transparency, such as advanced explainability interfaces and robust ethical audits, will likely become more sophisticated. The regulatory environment will likely evolve, offering clearer guidelines and standards for transparent AI. This dynamic field presents challenges and opportunities for businesses, developers, and policymakers, making ongoing engagement and adaptation essential for harnessing the full potential of transparent AI.