Confluent: Enabling AI-first transformation through real-time data

How Confluent is helping enterprises modernise data infrastructure with real-time streaming to scale AI responsibly, improve governance, and accelerate transformation across Southeast Asia.

The global race to harness artificial intelligence (AI) is pushing enterprises to confront a persistent, often overlooked obstacle: outdated data infrastructure. While many companies are eager to build and deploy AI models, most still rely on legacy systems that cannot provide the real-time, trustworthy data that modern AI demands.


This disconnect is becoming increasingly problematic. AI systems are only as effective as the data they are trained on and run against. Batch-based processes, fragmented pipelines, and inadequate data governance result in stale inputs, erroneous outputs, and costly retraining cycles. The promise of AI risks being undermined by a failure to modernise the data layer beneath it.

To close this gap, companies are turning to streaming data platforms that can deliver reliable, high-volume data as it is generated. Confluent, whose platform is built on Apache Kafka and extended with tools such as Apache Flink, is helping businesses move beyond traditional data architectures. Its platform enables organisations to support real-time decision-making at scale, allowing them to operationalise AI more effectively and responsibly.

AI transformation requires real-time, reliable data pipelines

Generative AI, large language models, and other advanced systems are only as intelligent as the data that feeds them. For enterprises looking to apply AI to fraud detection, personalisation, supply chain forecasting, or operational automation, milliseconds matter. Delays of even a few minutes can lead to incorrect recommendations, missed revenue opportunities, or compliance issues.

“Modern AI workloads require immediate access to high-quality, contextual data,” said Mike Agnich, GM and VP of Product at Confluent. “If data is delayed, low quality or inconsistent, your models won’t perform, no matter how advanced they are.”

This has become a growing concern in Asia Pacific, where Confluent’s 2025 Data Streaming Report found that 68% of IT leaders see batch-based pipelines as a major barrier to AI adoption. Many enterprises are layering AI on top of outdated, siloed systems never built for real-time insight, leading to poor performance and spiralling costs.

According to Andrew Sellers, Confluent’s Head of Technology Strategy, “The biggest AI data architecture challenge is data that’s slow, too fragmented, or too untrustworthy to support intelligent decision-making.” He added that this issue is particularly pronounced in Southeast Asia’s financial services and logistics sectors, where real-time responsiveness is essential but often held back by legacy infrastructure and skills gaps.

Confluent’s streaming platform addresses these challenges by treating data as a continuously flowing resource, not something stored and queried after the fact. Apache Kafka enables high-throughput event capture, while Apache Flink supports real-time enrichment and transformation, allowing AI systems to act on new information as soon as it arrives.
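To make "continuously flowing" concrete, here is a minimal plain-Python sketch of stream enrichment: each event is enriched and emitted the moment it arrives, using whatever reference data is current at that point. It is a simplified illustration, not Kafka or Flink code. The list of events stands in for a Kafka topic, the `profiles` dictionary stands in for the kind of continuously updated lookup table a Flink job might join against, and the function name and event fields are hypothetical.

```python
from typing import Dict, Iterable, Iterator


def enrich_stream(events: Iterable[dict], profiles: Dict[str, dict]) -> Iterator[dict]:
    """Enrich each event as it arrives rather than waiting for a batch.

    Hypothetical simplification: `events` stands in for a Kafka topic and
    `profiles` for a continuously updated lookup table.
    """
    for event in events:
        if event["type"] == "profile_update":
            # Reference data also arrives as a stream and updates the table in place.
            profiles[event["customer_id"]] = event["profile"]
            continue
        # Join the event with the latest known profile and emit it immediately.
        profile = profiles.get(event["customer_id"], {})
        yield {**event, "segment": profile.get("segment", "unknown")}


events = [
    {"type": "payment", "customer_id": "c1", "amount": 120.0},
    {"type": "profile_update", "customer_id": "c1", "profile": {"segment": "premium"}},
    {"type": "payment", "customer_id": "c1", "amount": 80.0},
]

for enriched in enrich_stream(events, profiles={}):
    print(enriched["amount"], enriched["segment"])
# 120.0 unknown
# 80.0 premium
```

Because the generator yields each result as soon as it is computed, the second payment already reflects the profile update that arrived moments earlier, whereas a batch job that ran before the update would have scored both payments against the older data.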

Why enterprises must rethink their data architecture

Legacy data systems were not designed to support AI at enterprise scale. Most still rely on extract-transform-load (ETL) or extract-load-transform (ELT) processes, which collect data from various systems, clean it, and store it in a warehouse, so it often becomes available only hours or days after it was generated. This delay breaks the feedback loop AI needs to function effectively.

Confluent encourages businesses to adopt a “Shift Left” approach: moving processing, validation, and governance as close to the data source as possible. This method reduces downstream bottlenecks and ensures data is clean, contextual, and compliant before it ever enters an AI pipeline.

“Shift Left helps to reduce time-to-value, save costs on downstream processing, and drive data adoption and growth,” said Sellers. “It reduces bad data proliferation, storage and compute needs in downstream systems.”
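Shift Left can be illustrated with a small producer-side check. The sketch below assumes a hypothetical order event with three required fields and validates each record before it is published, so malformed records are diverted to a dead-letter list instead of reaching downstream consumers. In a real deployment this contract would more likely be enforced through a registered schema; here plain Python lists stand in for the topic and the dead-letter queue, and all names are illustrative.

```python
from typing import Any, Dict, List, Tuple

# Hypothetical contract for an "order" event: field name -> expected type.
ORDER_SCHEMA: Dict[str, type] = {"order_id": str, "customer_id": str, "amount": float}


def validate(record: Dict[str, Any]) -> List[str]:
    """Return the problems found in a record; an empty list means it is valid."""
    problems = []
    for field, expected in ORDER_SCHEMA.items():
        if field not in record:
            problems.append(f"missing {field}")
        elif not isinstance(record[field], expected):
            problems.append(f"{field} should be {expected.__name__}")
    # A business rule checked at the source, not in a downstream warehouse.
    amount = record.get("amount")
    if isinstance(amount, float) and amount < 0:
        problems.append("amount must be non-negative")
    return problems


def publish_at_source(
    records: List[Dict[str, Any]],
    topic: List[Dict[str, Any]],
    dead_letters: List[Tuple[Dict[str, Any], List[str]]],
) -> None:
    """Publish only valid records; divert invalid ones with the reasons attached."""
    for record in records:
        problems = validate(record)
        if problems:
            dead_letters.append((record, problems))
        else:
            topic.append(record)


topic: List[Dict[str, Any]] = []
dead_letters: List[Tuple[Dict[str, Any], List[str]]] = []
publish_at_source(
    [
        {"order_id": "o1", "customer_id": "c1", "amount": 25.0},
        {"order_id": "o2", "amount": -5.0},
    ],
    topic,
    dead_letters,
)
print(len(topic), dead_letters[0][1])
# 1 ['missing customer_id', 'amount must be non-negative']
```

The design choice is that the rejection reasons travel with the bad record, so the team that owns the source can fix the problem once rather than every downstream consumer cleaning it up separately.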

The benefits are clear in practice. Meesho, a major e-commerce platform in India, used Confluent to build a real-time recommendation engine. Previously, Meesho struggled with delayed access to historical customer data, which limited its ability to personalise offers. With a streaming architecture, it could deliver bespoke promotions instantly to its 140 million active users, driving stronger engagement and higher sales.

Agnich explained that Shift Left also helps mitigate AI-related risks. “When validation, enrichment, and governance happen upstream, you get faster AI deployment, reduced operational costs, and higher-quality model outputs—all while meeting compliance requirements by design.”

This architectural rethink is particularly relevant in regulated markets like Singapore, where explainability and auditability are becoming baseline requirements for deploying AI in sensitive sectors such as finance and healthcare.

Building AI-ready teams with data streaming capabilities

In Singapore, real-time data readiness is increasingly seen as a competitive advantage. According to Confluent’s 2025 Data Streaming Report, 95% of IT leaders in Singapore plan to increase investments in data streaming platforms. However, they continue to face critical challenges, including uncertain data timeliness or quality (72%), ambiguous data lineage (67%), and a shortage of AI skills and expertise (69%). These obstacles highlight the urgent need to modernise both infrastructure and talent capabilities to fully realise the benefits of enterprise AI.

As AI adoption grows, so too does the demand for specialised talent. One of the most prominent obstacles organisations face is not building the models themselves but operating the data infrastructure that supports them. The rise of streaming-first architectures has introduced a new role: the Data Streaming Engineer.

“The Data Streaming Engineer is the formalisation of a fast-growing discipline,” said Sellers. “They’re the ones who make sure data is captured, transformed, governed, and delivered in real time—exactly what modern AI workloads demand.”

These professionals sit at the intersection of software engineering, distributed systems, and data architecture. They handle complex challenges such as schema evolution, event ordering, backpressure, and data durability, all of which are essential for building systems that are always on and always moving.
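Event ordering is a good example of what this work involves. Kafka guarantees ordering only within a partition, and its default partitioner sends records with the same key to the same partition, so keying events by entity keeps each entity’s history in sequence. The plain-Python sketch below imitates that routing and adds a per-key sequence check that drops duplicates, such as those a retrying producer might create. It is an illustration under stated assumptions: the partition count, event tuples, and sequence numbers are hypothetical, and `zlib.crc32` stands in for the murmur2 hash that Kafka’s default partitioner actually uses.

```python
import zlib
from collections import defaultdict
from typing import Dict, List, Tuple

Event = Tuple[str, int, str]  # hypothetical shape: (key, sequence number, payload)
NUM_PARTITIONS = 3  # hypothetical partition count


def partition_for(key: str) -> int:
    # Same key -> same partition, so one entity's events stay in order.
    # crc32 is a stand-in for the murmur2 hash Kafka's default partitioner uses.
    return zlib.crc32(key.encode("utf-8")) % NUM_PARTITIONS


def route(events: List[Event]) -> Dict[int, List[Event]]:
    """Append each event to its key's partition, preserving arrival order."""
    partitions: Dict[int, List[Event]] = defaultdict(list)
    for event in events:
        partitions[partition_for(event[0])].append(event)
    return partitions


def apply_in_order(partition: List[Event], last_seq: Dict[str, int]) -> List[Event]:
    """Skip events whose sequence number is not newer than the last one applied for that key."""
    applied = []
    for key, seq, payload in partition:
        if seq <= last_seq.get(key, -1):
            continue  # duplicate or stale replay
        last_seq[key] = seq
        applied.append((key, seq, payload))
    return applied


events: List[Event] = [
    ("acct-1", 0, "open"),
    ("acct-2", 0, "open"),
    ("acct-1", 1, "deposit"),
    ("acct-1", 1, "deposit"),  # duplicate, e.g. from a retried send
    ("acct-1", 2, "withdraw"),
]
last_seq: Dict[str, int] = {}
applied = [e for p in route(events).values() for e in apply_in_order(p, last_seq)]
print([payload for key, _, payload in applied if key == "acct-1"])
# ['open', 'deposit', 'withdraw']
```

Ordering across different keys is not preserved here, which mirrors the trade-off in Kafka itself: per-key order is what makes it possible to spread load across many partitions.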

To support this emerging talent pool, Confluent launched the Data Streaming Engineer Certification with the goal of training 5,000 engineers by the end of 2025. This programme is especially relevant in Southeast Asia’s digital-first economies, where demand for real-time infrastructure skills is rapidly outpacing supply. “Companies that come out on top in the AI race are those that can make streaming a repeatable, scalable capability,” said Agnich. “That starts with having the right people in place.”

Confluent also provides managed services, reusable design patterns, and training resources to help teams efficiently deploy and scale streaming use cases. These resources empower engineers to design and build sustainable systems that foster cross-functional collaboration and drive long-term innovation.

Open-source ecosystems and cross-border collaboration are driving innovation

Open source remains a powerful driver of innovation, particularly in the data infrastructure sector. Kafka and Flink, both open-source projects, form the core of Confluent’s platform and are widely used across Southeast Asia to solve high-scale data problems in fintech, logistics, and digital services.

According to Sellers, “Open source plays a foundational role in accelerating innovation, especially across diverse and rapidly developing markets like Southeast Asia. Technologies like Kafka and Flink have become trusted standards for real-time data infrastructure because they’re flexible, extensible, and community-led.”

He added that open source offers a model for collaboration across borders and industries, a trend that is increasingly important as Southeast Asia’s economies become more interconnected. Developers in Singapore, Malaysia, Indonesia, and Vietnam are adopting these tools to build data platforms that are both resilient and locally adaptable.

Confluent actively contributes to the Kafka and Flink communities, with employees serving on project management committees and helping shape the future of both technologies. The company also offers tools that make adoption easier for enterprises, such as managed Flink SQL, Stream Governance, and Tableflow, which bridges the gap between real-time streams and analytical data processing.

Agnich noted that “the innovation starts in the community. We want to help make it repeatable, stable, and production-ready, no matter the varying starting points of teams.”

This ecosystem-led approach is helping businesses across the region adopt modern data infrastructure without being locked into proprietary solutions. It also ensures that as innovation accelerates, it remains inclusive and scalable across industries.

What should business leaders prioritise now?

For organisations looking to scale AI responsibly and competitively, building a flexible, real-time data layer must be a strategic priority. But success depends on more than infrastructure; it also requires changes in leadership, culture, and collaboration.

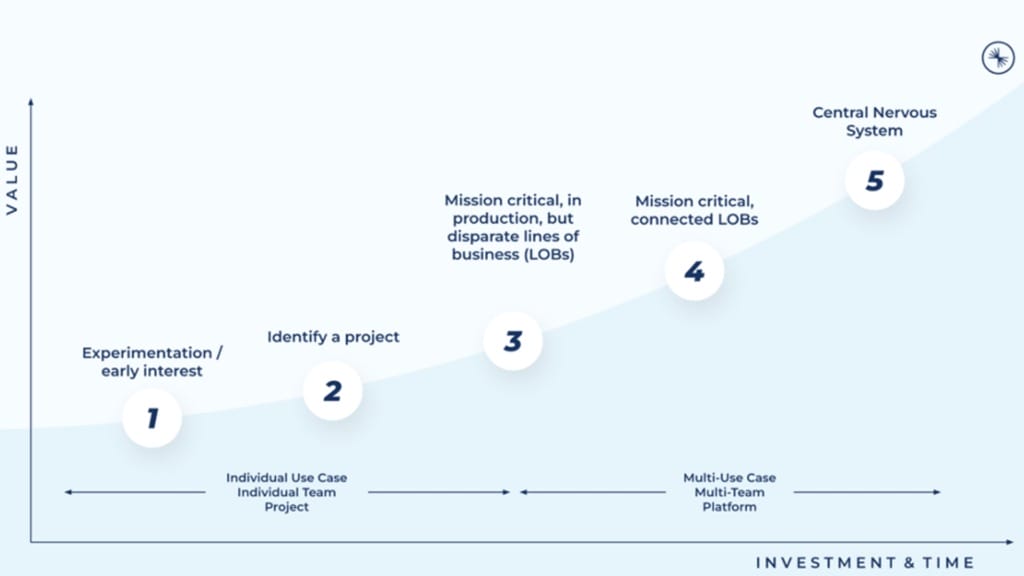

Sellers outlined six key signals that an organisation is ready to scale AI using real-time data: executive buy-in, connected data, resilient platforms, mature governance, cross-functional teams, and outcome-driven projects. These traits reflect an enterprise-wide shift from experimentation to operational excellence.

Conversely, organisations that still treat AI as a technical project run by siloed teams on brittle pipelines are unlikely to see sustainable impact. “They produce an AI product, but they’re missing the platform thinking required to scale,” he said.

To guide enterprises through this transformation, Confluent developed a maturity model that maps progress from isolated use cases to full-scale, governed platforms. Most companies today operate at Level 3, running a few critical workloads on streaming platforms but without unified governance or platform reuse. “Moving from Level 3 to Level 4 isn’t just a technical challenge. It’s an organisational one,” said Agnich. “That’s the gap we hope to help close.”

The shift from project mode to platform mode requires shared ownership, executive sponsorship, and a scalable operating model. Confluent supports this journey by offering not only tools but also operational blueprints that include observability, quality checks, multi-tenant controls, and self-service capabilities.

With real-time data increasingly becoming the foundation for AI, leaders must act now to build platforms that are not only fast and scalable, but also trusted, explainable, and ready for continuous innovation.