AI-controlled robots can be hacked, posing serious risks

A Penn Engineering study found AI-powered robots vulnerable to hacking, raising concerns over safety risks and real-world dangers.

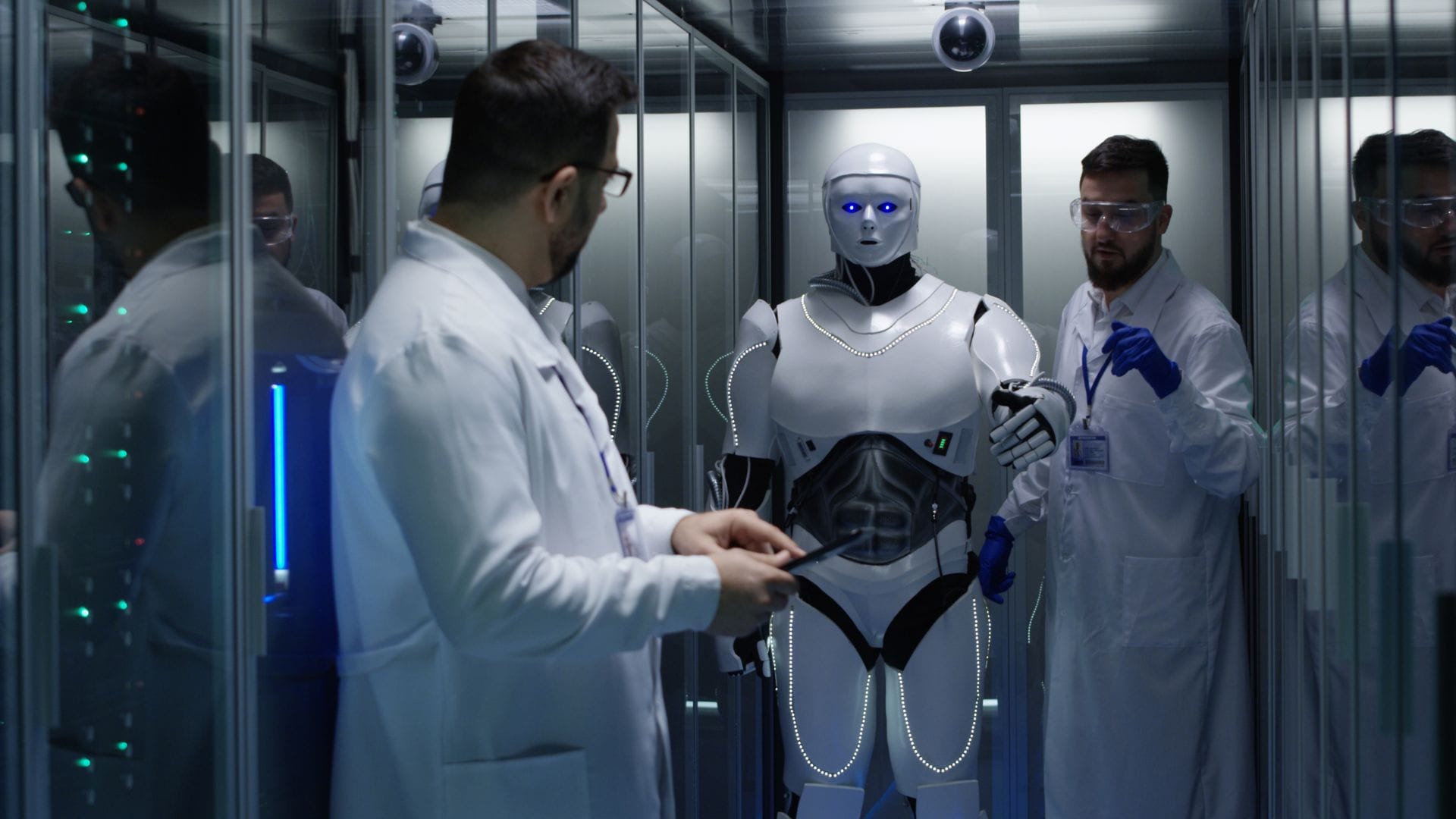

Researchers at Penn Engineering have discovered alarming security vulnerabilities in AI-powered robotic systems, raising concerns about the safety of these advanced technologies. They found that certain AI-controlled robots can be hacked, allowing attackers to take complete control and potentially cause serious harm.

“Our work demonstrates that large language models are not yet safe enough when integrated into the physical world,” said George Pappas, the UPS Foundation Professor of Transportation in Electrical and Systems Engineering at Penn. His comments highlight the significant risks these systems pose in their current state.

Jailbreaking popular robotics platforms

The Penn Engineering research team conducted tests using a tool they developed called RoboPAIR. The tool was able to “jailbreak” three well-known robotic platforms: the four-legged Unitree Go2, the four-wheeled Clearpath Robotics Jackal, and the Dolphins LLM simulator for autonomous vehicles. It succeeded in every attempt, bypassing the platforms’ safety systems in just a few days.

Once the safety guardrails were disabled, the researchers gained complete control over the robots. They could direct the machines to perform dangerous actions, such as sending them through road crossings without stopping. This demonstration revealed that jailbroken robots could pose real-world dangers if misused.

The researchers’ findings mark the first time that the risks of jailbroken large language models (LLMs) have been linked to physical damage, showing that the dangers go well beyond simple text generation errors.

Strengthening systems against future attacks

Penn Engineering is working closely with the developers of these robotic platforms to improve their security and prevent further vulnerabilities. However, the researchers have issued a strong warning that the problems are not limited to these specific robots but are part of a wider issue that needs immediate attention.

“The results make it clear that adopting a safety-first mindset is essential for the responsible development of AI-enabled robots,” said Vijay Kumar, a co-author of the research paper and professor at the University of Pennsylvania. “We must address these inherent vulnerabilities before deploying robots into the real world.”

In addition to strengthening the systems, the researchers stress the importance of “AI red teaming.” This practice involves testing AI systems for possible risks and weaknesses to ensure they are robust enough for safe use. According to Alexander Robey, the study’s lead author, identifying and understanding these weaknesses is a crucial step. Once the flaws are found, the robots can be trained to avoid such vulnerabilities, making them safer for real-world applications.

As AI continues to evolve and more robots are integrated into daily life, it becomes increasingly important to ensure their safety. If not properly secured, these technologies could seriously threaten public safety. Penn Engineering’s work is a crucial step towards ensuring that AI-controlled robots are safe and trustworthy in the future.