OpenAI takes steps to protect children online

OpenAI establishes a Child Safety team amid concerns over children’s use of AI tools and calls to regulate AI in education.

OpenAI has established a new team dedicated to ensuring the safety of children using its artificial intelligence (AI) tools. The move comes in response to concerns raised by activists and parents regarding the potential misuse or abuse of AI technology by minors.

In a recent job listing, OpenAI disclosed the formation of a Child Safety team tasked with collaborating with internal groups and external partners to develop processes and protocols for managing incidents related to underage users. The team is currently seeking to recruit a child safety enforcement specialist to oversee the implementation of OpenAI’s policies concerning AI-generated content, particularly content deemed sensitive or relevant to children.

Given regulations such as the U.S. Children’s Online Privacy Protection Rule, which governs how online services collect and use personal information from children under 13, it is not surprising that OpenAI is investing in child safety. OpenAI’s current terms of use already prohibit access for children under 13 and require parental consent for users aged 13 to 17, but the Child Safety team signals a more proactive approach to the risks associated with underage AI use.

The formation of this team follows OpenAI’s recent partnership with Common Sense Media to develop guidelines for child-friendly AI use, as well as the signing of its first education customer. These initiatives underscore OpenAI’s stated commitment to responsible AI development and its recognition of the need to mitigate the risks of minors using AI technology.

Concerns raised over children’s use of AI tools

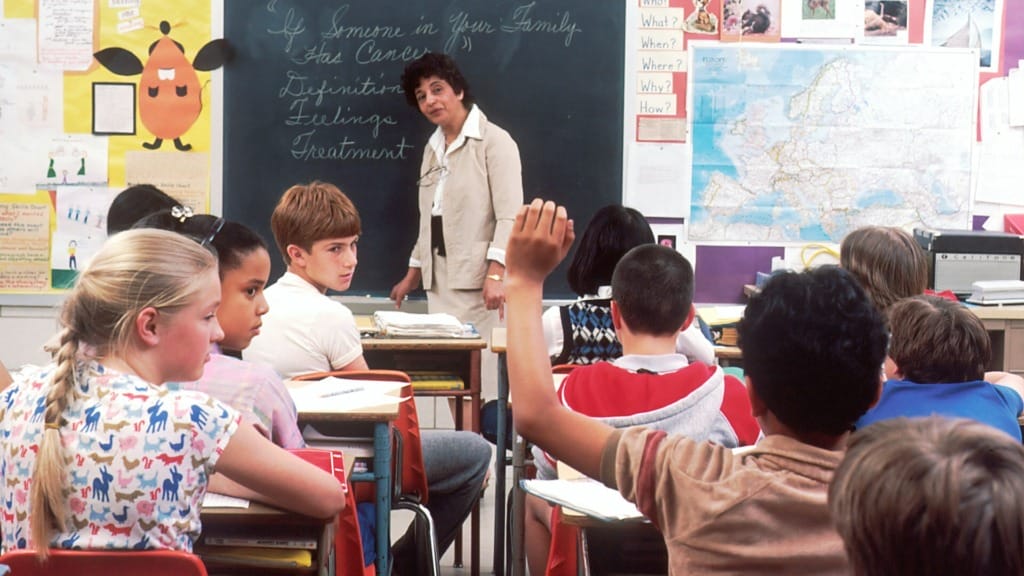

The increasing reliance of children and teenagers on AI tools for academic assistance and personal support has raised concerns among educators and policymakers. A poll conducted by the Center for Democracy and Technology revealed that a significant portion of young users have turned to AI platforms like ChatGPT to address issues such as anxiety, social conflicts, and family problems.

However, the growing popularity of AI among minors has also prompted scrutiny and apprehension. Last summer, several educational institutions imposed bans on ChatGPT due to concerns regarding plagiarism and dissemination of misinformation. While some bans have been lifted, lingering doubts remain about the potential negative impact of AI on young users.

According to surveys by organisations such as the U.K. Safer Internet Centre, a considerable proportion of children report having seen their peers misuse AI tools, for example to create false information or images intended to harm others.

In response to these challenges, OpenAI has published documentation guiding educators on the appropriate use of AI tools in classrooms. Acknowledging that AI-generated content may be unsuitable for some audiences, OpenAI advises caution when exposing children to its technology, even those who meet the minimum age requirements.

Calls for regulation and guidelines on AI use in education

International organisations such as UNESCO have called for stricter regulation of AI usage in education to safeguard children’s well-being and privacy. UNESCO has advocated for the implementation of age restrictions and data protection measures to mitigate the potential risks associated with AI technology in educational settings.

Audrey Azoulay, UNESCO’s director-general, emphasised the need for public engagement and regulatory frameworks to ensure the responsible integration of AI into education. While recognising the potential benefits of generative AI for human development, Azoulay cautioned against overlooking the potential harms and prejudices that could arise without adequate safeguards and regulations in place.