Microsoft ventures into custom AI chips for Azure data centres

Microsoft unveils custom AI and CPU chips for Azure, signalling a shift in cloud and AI technology, with implications for future AI applications and cost efficiencies.

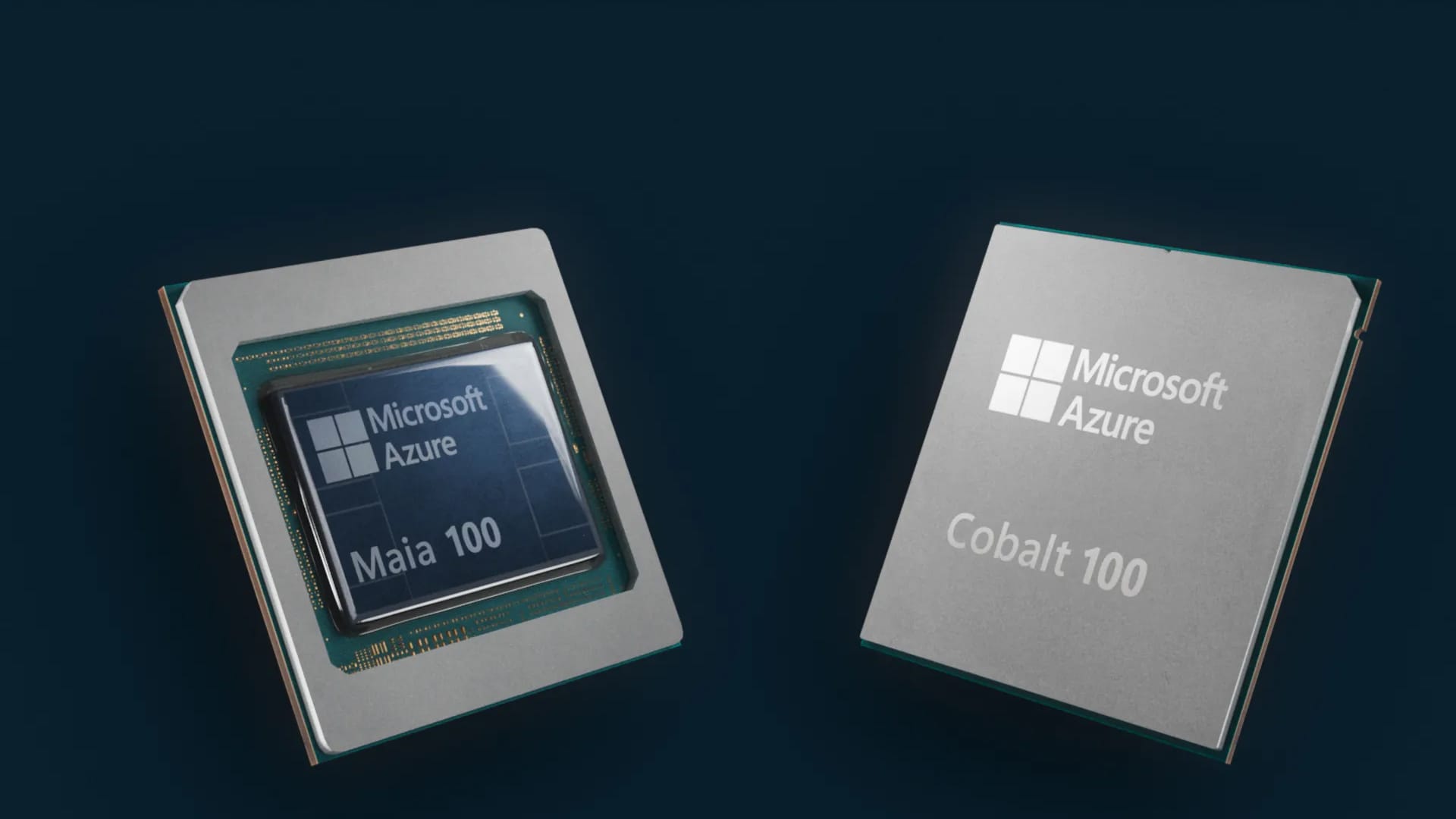

In a landmark move, Microsoft has unveiled its own custom AI chip, aimed at reducing dependence on Nvidia’s technology for training large language models. This innovation, coupled with Microsoft’s development of an Arm-based CPU, is set to enhance the capabilities of its Azure data centres, preparing the company and its clients for an AI-centric future.

The rise of Azure’s custom silicon chips

The Azure Maia AI chip and the Azure Cobalt CPU, both due to launch in 2024, mark a pivotal step in Microsoft’s hardware evolution. Demand for Nvidia’s H100 GPUs, widely used to train and run generative image tools and large language models, has skyrocketed, with prices exceeding US$40,000 on eBay. Rani Borkar, head of Azure hardware systems and infrastructure, highlights Microsoft’s extensive experience in silicon development, tracing back to collaborations on Xbox and Surface devices. This history underpins the new Azure Maia AI chip and Azure Cobalt CPU, both developed in-house to improve cloud server performance and efficiency.

Innovations in cloud and AI technology

Microsoft’s Azure Cobalt CPU, a 128-core chip based on Arm’s Neoverse CSS design, is customised to support Azure’s general cloud services. Borkar emphasises the chip’s focus on performance and power management. Microsoft’s initial tests show a performance improvement of up to 40% over the commercial Arm servers currently in its data centres. The Maia 100 AI accelerator, designed for cloud AI workloads such as training large language models and running inference on them, is integral to the company’s collaboration with OpenAI.

Manufactured on a 5-nanometre TSMC process, the Maia chip contains 105 billion transistors and supports sub-8-bit data types to speed up model training and inference. The chip is liquid-cooled, which allows higher server density and efficiency while fitting within Microsoft’s existing data centre footprint.

Future of Azure’s AI capabilities

Microsoft is testing the Maia 100 on GPT-3.5 Turbo and plans broader deployment, but it has not yet disclosed specific performance benchmarks. Borkar reiterates the importance of partnerships with Nvidia and AMD, viewing Microsoft’s advancements as complementary to existing cloud AI infrastructure. The “100” in the names Maia 100 and Cobalt 100 hints at future iterations, reflecting the rapid evolution of AI technology.

Implications for AI cloud services

By introducing these custom chips, Microsoft aims to optimise performance and offer diverse infrastructure choices to customers, potentially lowering AI costs. Pricing for the new server technologies is not yet available, but the rollout of Microsoft’s Copilot for Microsoft 365, priced at US$30 per user per month, indicates how the company is currently charging for AI services. The impact of the Maia chip on Microsoft’s AI-powered services, including Bing Chat, newly rebranded as Copilot, will be closely watched as the company advances in the AI domain.